Deploying an ingress controller to an internal virtual network, fronted by an Azure Application Gateway with WAF

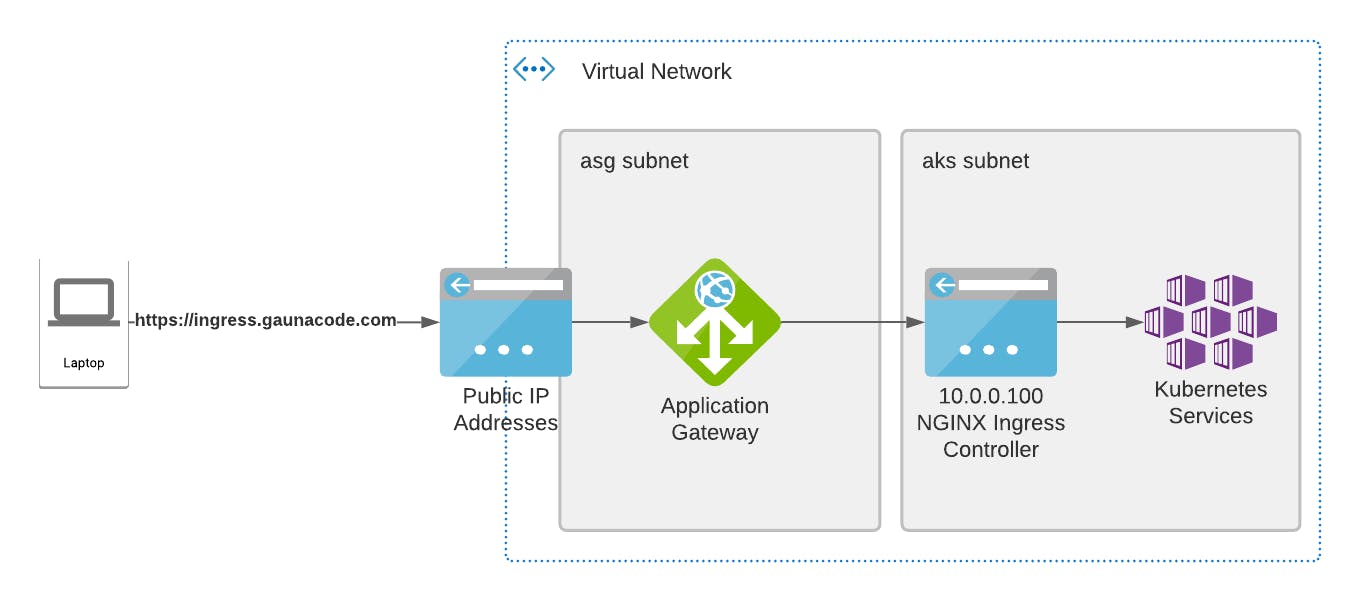

There are many ways to add a Web Application Firewall (WAF) in front of applications hosted on Azure Kubernetes Service (AKS). In this post, we’ll cover how to set up an NGINX ingress controller on AKS, then create an Azure Application Gateway that accepts traffic on a public IP, terminates TLS, and forwards the traffic unencrypted to the NGINX ingress controller listening on a private IP.

Pre-Requisites

An AKS cluster using Azure CNI as the network plugin

A virtual network with two subnets, asg and aks. The asg subnet will hold the Application Gateway, and the aks subnet will hold the AKS cluster.

A resource group, in this case rg-appg-ingress-test

Your AKS cluster's identity will need the Network Contributor role scoped to the virtual network
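If the role assignment from the last prerequisite is missing, something like the following sketch could add it with the Azure CLI. The cluster name aks-ingress-test is a placeholder I made up; the resource group and virtual network names are the ones used in this post. It also assumes the cluster uses a system-assigned managed identity; with a service principal or a user-assigned identity, the principal ID would have to come from a different property.

```shell
# Grant the AKS cluster identity "Network Contributor" on the virtual network.
# Usage: grant_network_contributor <resource-group> <aks-cluster-name> <vnet-name>
grant_network_contributor() {
  local rg="$1" cluster="$2" vnet="$3"
  local principal_id vnet_id

  # Assumption: the cluster has a system-assigned managed identity.
  principal_id="$(az aks show -g "$rg" -n "$cluster" --query identity.principalId -o tsv)"
  vnet_id="$(az network vnet show -g "$rg" -n "$vnet" --query id -o tsv)"

  az role assignment create \
    --assignee "$principal_id" \
    --role "Network Contributor" \
    --scope "$vnet_id"
}

# Example (aks-ingress-test is a hypothetical cluster name):
# grant_network_contributor rg-appg-ingress-test aks-ingress-test vnet-ingress-test
```

Role assignments can take a little while to propagate, so the ingress controller may not pick up the permission immediately.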

Deploying NGINX ingress controller with a private IP

In this section, we’ll borrow instructions from this Microsoft docs page.

First, we need a private IP address for the NGINX ingress controller to listen on. Choose a private IP address in the virtual network and verify that it’s available. In this case, the IP address I chose is 10.0.0.100.

az network vnet check-ip-address --name vnet-ingress-test -g rg-appg-ingress-test --ip-address 10.0.0.100

Create the following file named internal-ingress.yaml with the chosen IP address.

controller:
  service:
    loadBalancerIP: 10.0.0.100
    annotations:
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"

This will be used to configure the NGINX Helm chart to use the given IP address for the ingress controller.

# Create a namespace for your ingress resources
kubectl create namespace ingress

# Add the ingress-nginx repository
helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

# Use Helm to deploy an NGINX ingress controller
helm install nginx-ingress ingress-nginx/ingress-nginx \
    --namespace ingress \
    -f internal-ingress.yaml \
    --set controller.replicaCount=2 \
    --set controller.nodeSelector."kubernetes\.io/os"=linux \
    --set defaultBackend.nodeSelector."kubernetes\.io/os"=linux \
    --set controller.admissionWebhooks.patch.nodeSelector."kubernetes\.io/os"=linux

It may take a few minutes for the IP address to be assigned.

$ kubectl --namespace ingress get services -o wide -w nginx-ingress-ingress-nginx-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

nginx-ingress-ingress-nginx-controller LoadBalancer 10.0.75.105 80:31026/TCP,443:30254/TCP 2m8s app.kubernetes.io/component=controller,app.kubernetes.io/instance=nginx-ingress,app.kubernetes.io/name=ingress-nginx

If the external IP is stuck in <pending>, the ingress controller most likely can't reserve the static IP. The usual cause is that the AKS cluster's identity is missing the Network Contributor role.

You can verify this by looking at the events for the service.

kubectl --namespace ingress describe service nginx-ingress-ingress-nginx-controller
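If you'd rather script the wait than watch the output, here's a minimal sketch that polls the service until an IP shows up. The function name and the retry defaults are my own choices, not something from the docs; the jsonpath expression reads the standard status.loadBalancer field of a Service.

```shell
# Poll the ingress controller Service until the load balancer IP is assigned.
# Usage: wait_for_ingress_ip <namespace> <service-name> [attempts] [delay-seconds]
wait_for_ingress_ip() {
  local ns="$1" svc="$2" attempts="${3:-30}" delay="${4:-10}"
  local ip i=0

  while [ "$i" -lt "$attempts" ]; do
    # Empty output means the IP is still pending.
    ip="$(kubectl --namespace "$ns" get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done

  echo "no IP assigned to $svc after $attempts attempts" >&2
  return 1
}

# Example:
# wait_for_ingress_ip ingress nginx-ingress-ingress-nginx-controller
```

If the function gives up, the service events are the next place to look.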

Deploying two test applications

Let’s create a namespace for the sample apps.

kubectl create ns ingress-test

Deploy the first sample application.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld
  namespace: ingress-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld
  template:
    metadata:
      labels:
        app: aks-helloworld
    spec:
      containers:
      - name: aks-helloworld
        image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "Welcome to Azure Kubernetes Service (AKS)"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld
  namespace: ingress-test
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: aks-helloworld
EOF

Now, deploy the second test application in the same namespace.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-demo
  namespace: ingress-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ingress-demo
  template:
    metadata:
      labels:
        app: ingress-demo
    spec:
      containers:
      - name: ingress-demo
        image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "AKS Ingress Demo"
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-demo
  namespace: ingress-test
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: ingress-demo
EOF

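Next, create the ingress definition so we can verify the ingress controller. This creates an ingress rule that maps requests for the domain in DOMAIN_NAME to the appropriate service in Kubernetes. Set DOMAIN_NAME to your own domain; the tests later in this post use ingress.gaunacode.com.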

export DOMAIN_NAME="mysub.mydomain.com"

# The heredoc is unquoted so that \$DOMAIN_NAME expands. The backslash in
# front of the rewrite target's \$1 keeps the shell from expanding that too.
cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
  namespace: ingress-test
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /\$1
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: aks-helloworld
      port:
        number: 80
  rules:
  - host: $DOMAIN_NAME
    http:
      paths:
      - backend:
          service:
            name: aks-helloworld
            port:
              number: 80
        path: /hello-world-one
        pathType: Prefix
      - backend:
          service:
            name: ingress-demo
            port:
              number: 80
        path: /hello-world-two
        pathType: Prefix
EOF

Notice the nginx.ingress.kubernetes.io/ssl-redirect: "false" annotation. It ensures that if we ever assign a TLS certificate to the ingress definition, NGINX won't start redirecting HTTP traffic to HTTPS. This matters because the Application Gateway terminates TLS, and we want any HTTP-to-HTTPS behavior enforced by the Application Gateway, not by the ingress controller. Otherwise, the redirect could interfere with the health probes from the Application Gateway to the Kubernetes cluster.

Testing the ingress controller from a test container

Now, let’s launch a pod to validate the configuration.

kubectl run -it --rm aks-ingress-test --image=debian --namespace ingress-test

You will be inside the container at this point. Install curl, which we’ll use to send requests to our ingress controller.

apt-get update && apt-get install -y curl

Once curl is installed in the container, let’s make sure that our ingress is working.

curl -L -H "Host: ingress.gaunacode.com" http://10.0.0.100

Notice how we’re changing the Host header to ensure our ingress route is used. We haven’t created an external DNS record or configured the application gateway yet.

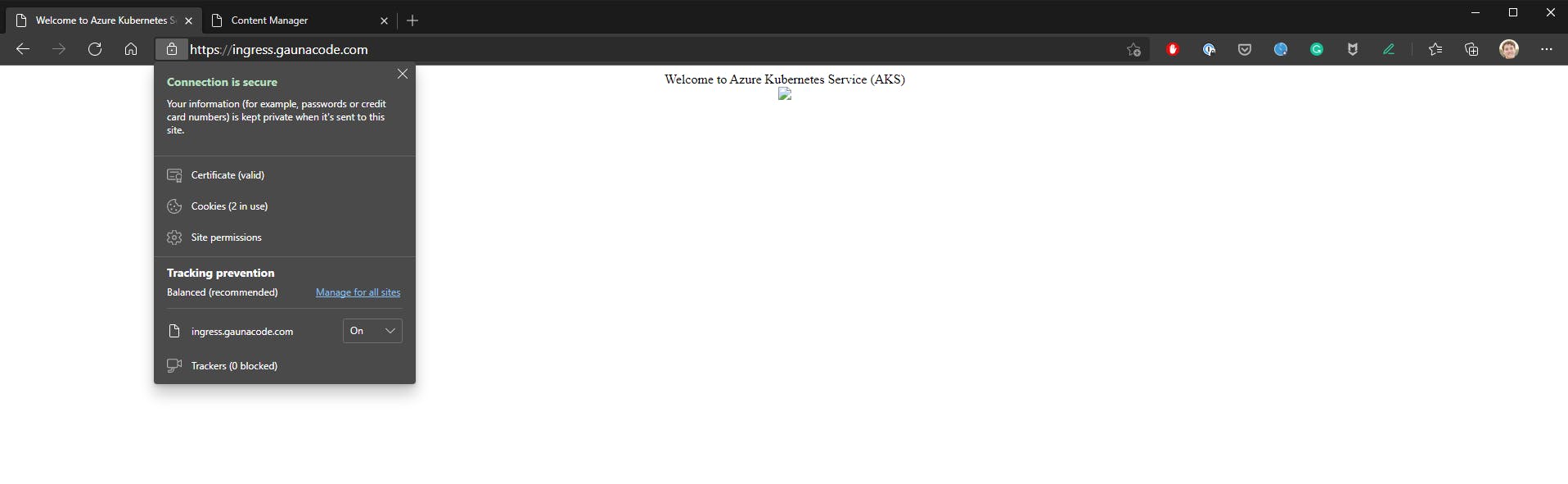

If everything works well, you should see HTML containing “Welcome to Azure Kubernetes Service (AKS)”. That’s the title of one of our sample applications.

root@aks-ingress-test:/# curl -L -H "Host: ingress.gaunacode.com" http://10.0.0.100

<form id="form" name="form" action="/"" method="post"> Welcome to Azure Kubernetes Service (AKS)
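To check both path rules in one go, a small helper like this could be used from the same test pod. It's only a sketch: the function name is mine, and it deliberately leaves out -L so that a redirect shows up as a 3xx status code instead of being followed silently.

```shell
# Print the HTTP status code returned for each path routed by the ingress.
# Usage: check_ingress_paths <host-header> <ingress-ip> <path>...
check_ingress_paths() {
  local host="$1" ip="$2" path code
  shift 2

  for path in "$@"; do
    # -o /dev/null discards the body; -w prints only the status code.
    code="$(curl -s -o /dev/null -w '%{http_code}' -H "Host: $host" "http://$ip$path")"
    echo "$path $code"
  done
}

# Example, run inside the test pod:
# check_ingress_paths ingress.gaunacode.com 10.0.0.100 /hello-world-one /hello-world-two
```

A 200 for each path suggests the routing works. A 3xx would be a hint that NGINX is redirecting to HTTPS, which is what the ssl-redirect annotation is meant to prevent.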

Preparing an Application Gateway

If you don’t have one, here’s how to create a Standard_v2 application gateway. The --servers argument pre-configures the backend pool with the private IP of the ingress controller. Standard_v2 is enough to test the routing; the WAF features themselves require the WAF_v2 SKU.

az network public-ip create -g rg-appg-ingress-test -l eastus -n pip-appg-ingress-test --sku Standard

az network application-gateway create --name azappg-appg-ingress-test -g rg-appg-ingress-test -l eastus --sku Standard_v2 --public-ip-address pip-appg-ingress-test --vnet-name vnet-ingress-test --subnet appg --servers 10.0.0.100

Configuring the Application Gateway (Manually)

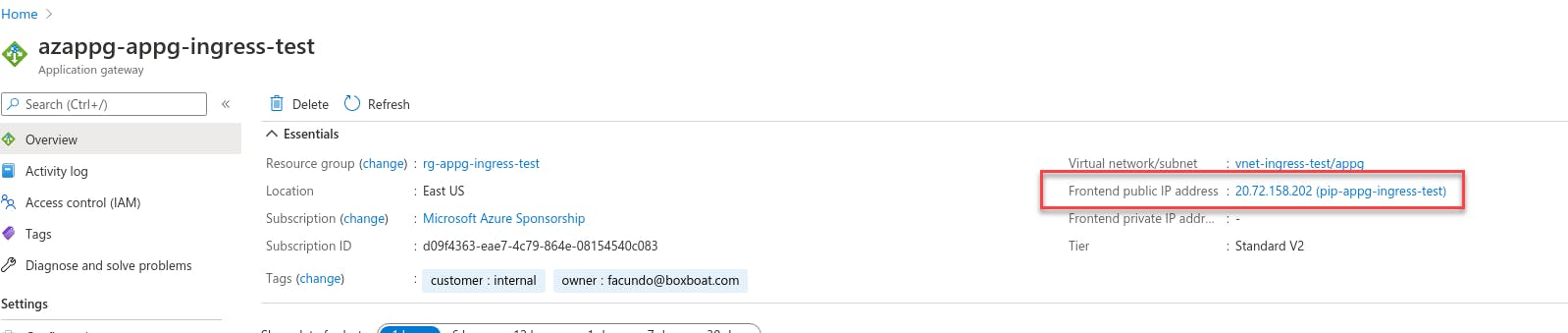

Next, grab the public IP of the application gateway and create an external DNS record.

In my case,

[A record @ ingress.gaunacode.com] -> 20.72.158.202
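If you prefer the CLI over the portal, the public IP can be read with az network public-ip show. The second function below is only relevant if your DNS zone happens to be hosted in Azure DNS, which is an assumption on my part; the zone resource group, zone name, and record name in the example are placeholders.

```shell
# Print the public IP address assigned to the Application Gateway frontend.
# Usage: get_public_ip <resource-group> <public-ip-name>
get_public_ip() {
  az network public-ip show -g "$1" -n "$2" --query ipAddress -o tsv
}

# Add an A record to a zone hosted in Azure DNS (assumption: you use Azure DNS).
# Usage: add_a_record <zone-resource-group> <zone-name> <record-name> <ipv4-address>
add_a_record() {
  az network dns record-set a add-record -g "$1" -z "$2" -n "$3" -a "$4"
}

# Example (rg-dns and the zone are hypothetical):
# ip="$(get_public_ip rg-appg-ingress-test pip-appg-ingress-test)"
# add_a_record rg-dns gaunacode.com ingress "$ip"
```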

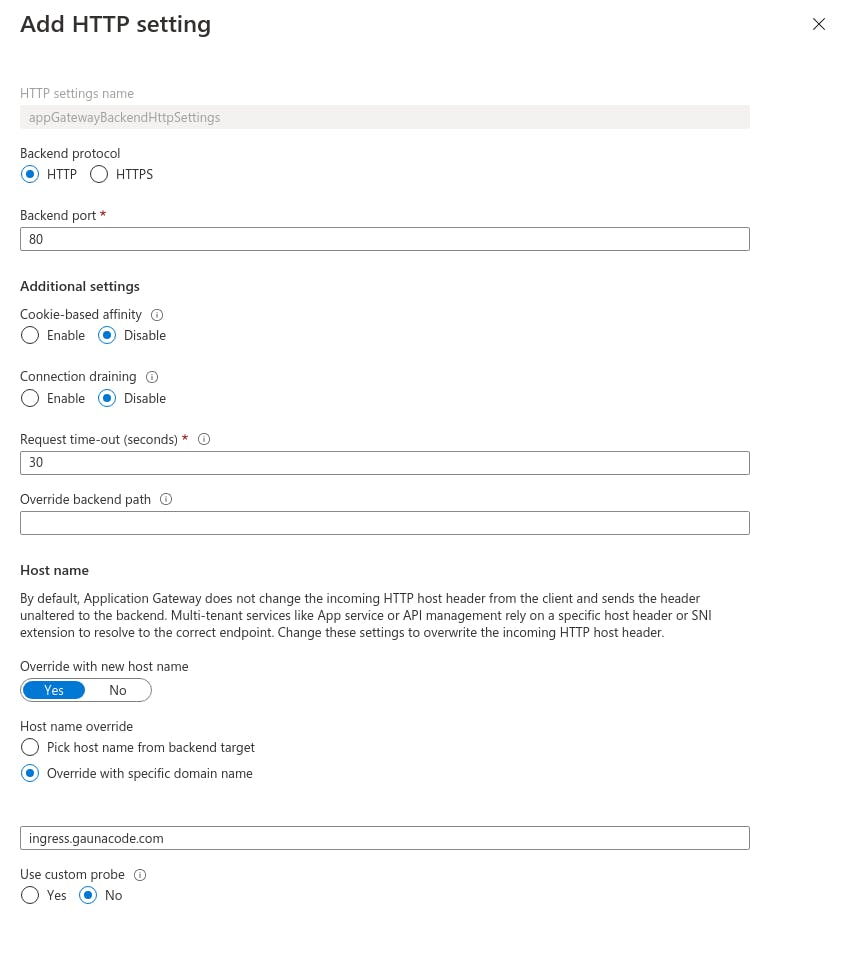

Modify the default HTTP settings and override the hostname. This will ensure that our ingress rule is used in the NGINX ingress controller.
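The same hostname override can probably be scripted instead of clicked. This sketch assumes the HTTP settings still have the default name that az network application-gateway create assigns, appGatewayBackendHttpSettings; run az network application-gateway http-settings list to confirm the name on your gateway before relying on it.

```shell
# Override the host name sent to the backend for a given HTTP settings object.
# Usage: set_backend_host_name <resource-group> <gateway-name> <settings-name> <host-name>
set_backend_host_name() {
  local rg="$1" gateway="$2" settings="$3" host="$4"

  az network application-gateway http-settings update \
    -g "$rg" --gateway-name "$gateway" -n "$settings" \
    --host-name "$host"
}

# Example (the settings name is an assumed default, verify it first):
# set_backend_host_name rg-appg-ingress-test azappg-appg-ingress-test \
#   appGatewayBackendHttpSettings ingress.gaunacode.com
```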

Verify that the ingress works. In my case, that’s http://ingress.gaunacode.com. HTTPS should not work yet.

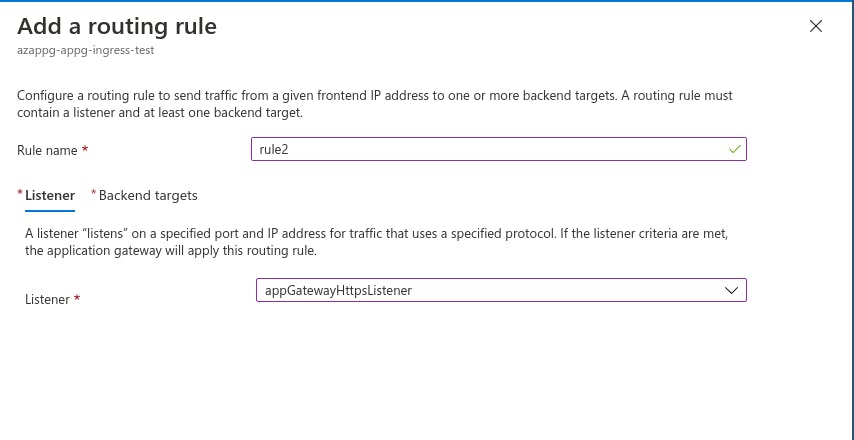

Create an HTTPS listener. I am using a trial certificate for the ingress.gaunacode.com domain.

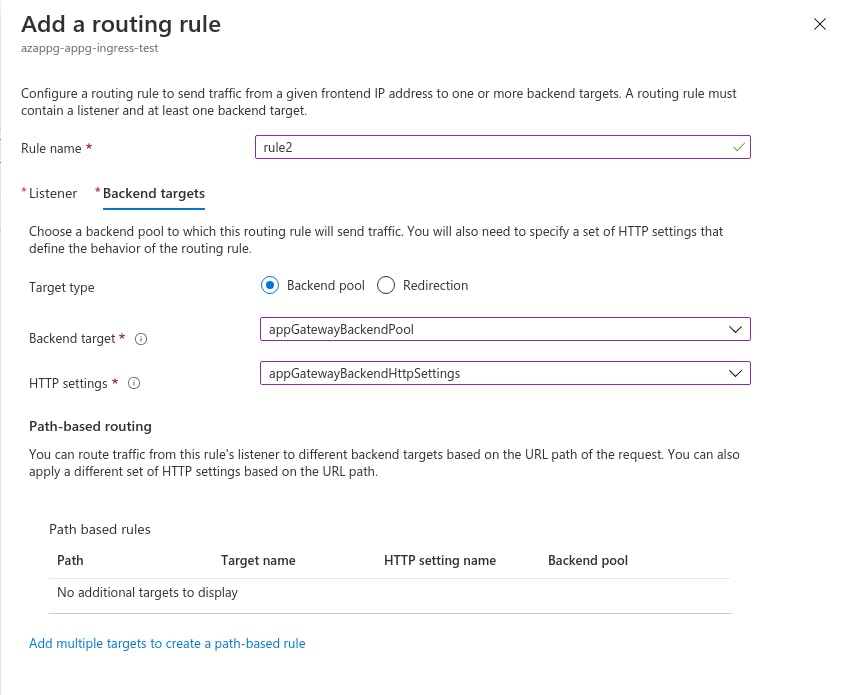

Next, create a “Rule” to tie the HTTPS listener to the backend.

Then, under “Backend Targets”, select the backend pool and the HTTP settings.

Once the “Rule” is created, the App Gateway should accept traffic on the public IP through the HTTPS listener, which the Rule ties to the backend and the HTTP settings. The App Gateway then opens a new connection to the NGINX ingress controller on its private static IP, overriding the hostname so that the Ingress rule kicks in.
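For reference, here is roughly what the portal steps above might look like with the Azure CLI. Treat it as a sketch under several assumptions: the names ingress-cert, port-443, https-listener, and https-rule are made up, the backend pool and HTTP settings are assumed to still have their default names, the priority value is arbitrary and must not clash with an existing rule, and the certificate is assumed to be a password-protected PFX file.

```shell
# Add an HTTPS listener and a rule that routes it to the existing backend.
# Usage: add_https_listener <resource-group> <gateway-name> <pfx-file> <pfx-password> <host-name>
add_https_listener() {
  local rg="$1" gateway="$2" pfx="$3" pfx_password="$4" host="$5"

  # Upload the certificate that the listener will present.
  az network application-gateway ssl-cert create \
    -g "$rg" --gateway-name "$gateway" -n ingress-cert \
    --cert-file "$pfx" --cert-password "$pfx_password" &&

  # Open port 443 on the frontend.
  az network application-gateway frontend-port create \
    -g "$rg" --gateway-name "$gateway" -n port-443 --port 443 &&

  # Create the HTTPS listener for the host name.
  az network application-gateway http-listener create \
    -g "$rg" --gateway-name "$gateway" -n https-listener \
    --frontend-port port-443 --ssl-cert ingress-cert --host-name "$host" &&

  # Tie the listener to the backend pool and HTTP settings (assumed default names).
  az network application-gateway rule create \
    -g "$rg" --gateway-name "$gateway" -n https-rule \
    --http-listener https-listener --rule-type Basic \
    --address-pool appGatewayBackendPool \
    --http-settings appGatewayBackendHttpSettings \
    --priority 200
}

# Example (file name and password are placeholders):
# add_https_listener rg-appg-ingress-test azappg-appg-ingress-test cert.pfx 'pfx-password' ingress.gaunacode.com
```

Passing the PFX password as an argument is fine for a quick test, but it will end up in your shell history, so you may want to read it from an environment variable instead.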

That’s it! Hope that helped.